Tencent's Hunyuan-A13B: A Smart Approach to Efficient Large Language Models

Beyond the parameter race: How Tencent built a 13B model that punches above its weight

The AI world has been obsessed with bigger and bigger models, but Tencent's new Hunyuan-A13B takes a refreshingly different approach. Instead of just throwing more parameters at the problem, they've built something that's actually practical to use while still delivering impressive performance. After diving into the technical details, I think this model represents a smart evolution in how we think about AI efficiency.

The Smart Architecture Behind the Performance

Here's what makes Hunyuan-A13B genuinely interesting: it uses a Mixture-of-Experts (MoE) architecture with 80 billion total parameters, but only 13 billion are activated for each token during inference⁴⁵. This isn't just a clever engineering trick: it's a deliberate rethinking of how to balance performance with resource efficiency.
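To make the "active parameters" idea concrete, here is a toy sketch of generic top-k expert routing in pure Python. It is not Tencent's implementation: the four scaling "experts", the router weights, `k=2`, and the names `moe_forward` and `router` are all illustrative assumptions. It shows the core trick: a router scores every expert, but only the top-k experts actually run for a given token.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    # One router logit per expert: a dot product between x and that expert's router row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Keep only the k highest-scoring experts for this token.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are a softmax over the selected experts' logits only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only these k experts run; the rest are skipped entirely
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


if __name__ == "__main__":
    # Four toy "experts" (hypothetical) that just scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.1, 0.0], [0.5, 0.0], [0.3, 0.0], [0.9, 0.0]]
    out, chosen = moe_forward([1.0, 0.0], router, experts, k=2)
    print("experts used:", chosen)  # experts 3 and 1 have the largest logits
    print("output:", out)
    # The article's headline ratio: 13B of 80B parameters active per token.
    print(f"active fraction: {13 / 80:.4f}")
```

Scale the same idea up to many large feed-forward experts and you get the 80B-total / 13B-active split: every expert has to exist, but each token only pays for the few it is routed to.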

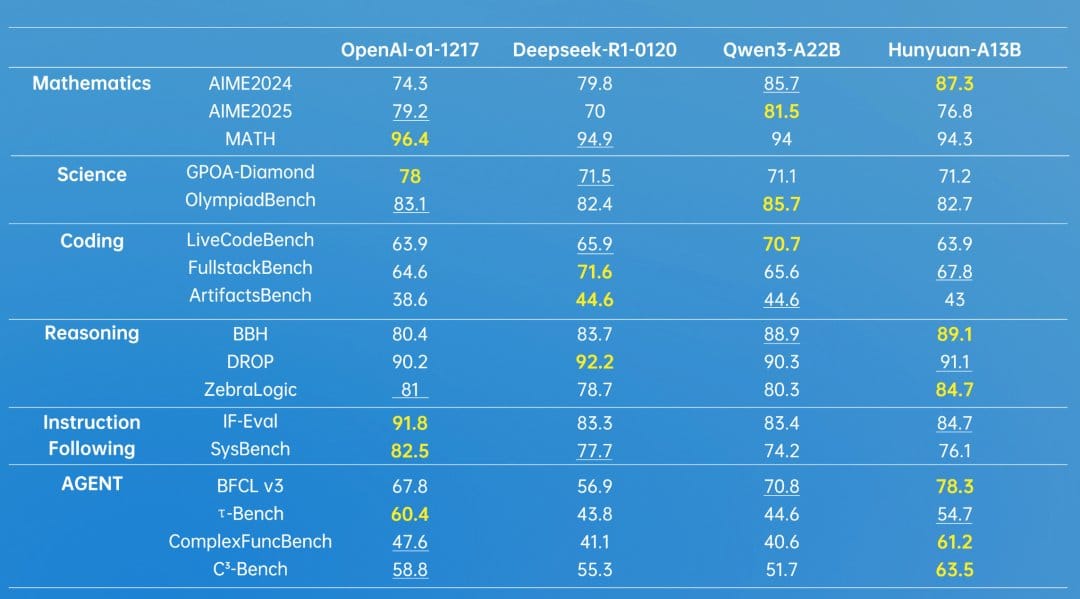

The model demonstrates highly competitive performance across multiple benchmarks, particularly excelling in mathematics, science, coding, and reasoning tasks¹²⁴. What impressed me most is its state-of-the-art agentic performance on benchmarks such as BFCL-v3 and τ-Bench⁶⁵.

Dual-Mode Reasoning: Fast When You Need It, Deep When You Want It

One of the standout features is the dual-mode reasoning capability. You can toggle between "slow-thinking" and "fast-thinking" modes, enabling or disabling chain-of-thought reasoning depending on what you're trying to accomplish¹².

This isn't just a gimmick—it addresses a real problem in AI applications. Sometimes you need quick, direct answers. Other times, you want the model to work through complex problems step by step. Having that choice built into the architecture is genuinely useful.
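If you consume the model's output programmatically, you'll usually want to separate the reasoning from the final answer. The sketch below assumes the model wraps its chain of thought in `<think>` and `</think>` tags and its final reply in `<answer>` and `</answer>`; treat those tag names as an assumption to verify against the official model card, and the helper `split_reasoning` as hypothetical.

```python
import re
from typing import Optional, Tuple

# Assumed output tags; confirm against the model card before relying on them.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[Optional[str], str]:
    """Return (reasoning, answer); reasoning is None if the think block is absent or empty."""
    think = THINK_RE.search(text)
    reasoning = (think.group(1).strip() or None) if think else None
    answer = ANSWER_RE.search(text)
    if answer:
        final = answer.group(1).strip()
    else:
        # No answer tags: fall back to whatever remains once the think block is removed.
        final = THINK_RE.sub("", text).strip()
    return reasoning, final


if __name__ == "__main__":
    slow = "<think>17 * 3 = 51</think>\n<answer>51</answer>"
    fast = "<think>\n\n</think>\n<answer>51</answer>"
    print(split_reasoning(slow))  # ('17 * 3 = 51', '51')
    print(split_reasoning(fast))  # (None, '51')
```

If the think block is empty or missing, as you might expect in fast mode, `split_reasoning` returns `None` for the reasoning and you can go straight to the answer.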

Training That Actually Makes Sense

The training process behind Hunyuan-A13B shows real thoughtfulness. It starts with pretraining on 20 trillion tokens, followed by fast annealing and long-context adaptation⁵. This staged curriculum is designed to prepare the model for diverse applications while keeping it efficient.

The approach also includes progressively augmented data training to better align the model with target domain distributions¹¹. It's not just about feeding the model more data: it's about feeding it the right data in the right way.

Real-World Applications Across Industries

What sets Hunyuan-A13B apart is its versatility across different sectors. This isn't just another chatbot—it's designed to handle serious enterprise applications¹³¹⁴.

Healthcare and Professional Services

The model shows particular promise in healthcare, financial services, manufacturing, and government sectors⁹¹⁰. Its ability to handle complex reasoning tasks efficiently makes it a candidate for uses such as clinical decision support and operational optimization.

AI Research and Development

Researchers and developers can use Hunyuan-A13B to build custom AI tools, such as agents that call out to machine-learning or statistical-analysis routines. Its capabilities lend themselves to data analysis and automation¹⁵, and its open release on GitHub invites collaborative development.

Natural Language Processing Excellence

The model excels in commonsense understanding and reasoning, along with classical NLP tasks like question answering and reading comprehension¹. Evidence-based clinical tools for professionals¹⁹ illustrate the kind of specialized field where these skills could be applied.

Easy Implementation for Developers

One thing I really appreciate is how developer-friendly the implementation is. You can integrate Hunyuan-A13B using the standard transformers library, with clear code snippets provided for loading the model, applying reasoning modes, and managing inference operations¹².
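Here is a minimal sketch of that workflow with the transformers library. Several details are assumptions rather than confirmed API: the repo id `tencent/Hunyuan-A13B-Instruct`, the need for `trust_remote_code=True`, and the `enable_thinking` keyword being honored by the model's chat template, which is how this sketch switches between slow and fast thinking. Check all three against the official README. `device_map="auto"` also requires the accelerate package.

```python
MODEL_ID = "tencent/Hunyuan-A13B-Instruct"  # assumed Hugging Face repo id; confirm on the model card


def build_messages(prompt: str) -> list:
    # Standard chat-message format accepted by apply_chat_template.
    return [{"role": "user", "content": prompt}]


def generate(prompt: str, think: bool = True, max_new_tokens: int = 512) -> str:
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, device_map="auto", torch_dtype="auto", trust_remote_code=True
    )
    # Extra kwargs such as enable_thinking are forwarded to the chat template;
    # that this template uses it to toggle reasoning is an assumption.
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
        enable_thinking=think,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    prompt_len = inputs["input_ids"].shape[-1]
    # Decode only the new tokens; keep special tokens so any reasoning tags survive.
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=False)
```

Call `generate("Explain MoE routing briefly.", think=False)` for a quick answer, or leave `think=True` for step-by-step reasoning. Loading all 80 billion parameters still needs substantial GPU memory.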

This practical approach to accessibility is crucial. Too many powerful models end up being academic curiosities because they're too difficult to actually use. Tencent has clearly thought about the developer experience here.

Structured Understanding and Advanced Capabilities

Related work from the Hunyuan team also shows significant advances in structured captioning: HunyuanVideo generates JSON-formatted descriptions that capture content along multiple descriptive dimensions¹⁸³. This kind of structured output improves the quality of downstream generative applications.
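Structured captions are useful precisely because they are machine-readable. As a hedged illustration, the sketch below validates a JSON caption against a small schema and flattens it into a text prompt. The keys `summary`, `subjects`, `setting`, and `style` are hypothetical; they are not taken from any published Hunyuan caption format.

```python
import json

REQUIRED_KEYS = ("summary", "subjects", "setting", "style")  # hypothetical schema


def parse_caption(raw: str) -> dict:
    caption = json.loads(raw)
    if not isinstance(caption, dict):
        raise ValueError("caption must be a JSON object")
    missing = [k for k in REQUIRED_KEYS if k not in caption]
    if missing:
        raise ValueError(f"caption missing keys: {missing}")
    return caption


def to_prompt(caption: dict) -> str:
    # Flatten each descriptive dimension into its own clause.
    subjects = ", ".join(caption["subjects"])
    return f'{caption["summary"]}. Subjects: {subjects}. Setting: {caption["setting"]}. Style: {caption["style"]}.'


if __name__ == "__main__":
    raw = ('{"summary": "A cat naps on a windowsill", "subjects": ["cat"], '
           '"setting": "sunlit apartment", "style": "photorealistic"}')
    print(to_prompt(parse_caption(raw)))
```

Validating before use means a malformed caption fails loudly instead of silently producing a degraded prompt.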

Neural scaling laws also played a central role in development, letting researchers tune performance by modeling how model size, dataset size, and compute budget relate to one another¹⁸.
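One common way to tie those three quantities together is the rule of thumb C ≈ 6·N·D training FLOPs from Kaplan et al.'s scaling-law work, where N is the parameter count and D the number of training tokens. As a back-of-the-envelope sketch, assuming that for an MoE model N should be the active parameters and ignoring attention and routing overhead, the article's numbers give:

```python
def training_flops(params: float, tokens: float) -> float:
    # Rule of thumb: ~6 FLOPs per parameter per training token (~2 forward + ~4 backward).
    return 6.0 * params * tokens


ACTIVE_PARAMS = 13e9     # parameters used per token (from the article)
TOTAL_PARAMS = 80e9      # all parameters across experts (from the article)
PRETRAIN_TOKENS = 20e12  # pretraining corpus size (from the article)

moe_flops = training_flops(ACTIVE_PARAMS, PRETRAIN_TOKENS)
dense_flops = training_flops(TOTAL_PARAMS, PRETRAIN_TOKENS)  # hypothetical dense 80B baseline

print(f"MoE, 13B active: {moe_flops:.2e} FLOPs")  # 1.56e+24
print(f"Dense 80B:       {dense_flops:.2e} FLOPs")  # 9.60e+24
print(f"ratio: {dense_flops / moe_flops:.1f}x")  # 6.2x
```

Under those assumptions, the MoE design needs roughly 80/13, or about 6x, less pretraining compute than a dense model of the same total size would.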

Community-Driven Development Philosophy

What stands out about Hunyuan-A13B's development is its emphasis on community collaboration and adaptability⁸. This isn't just corporate speak—it reflects a genuine understanding that the most successful AI tools evolve with their user communities.

Future initiatives focus on refining the model to meet evolving market demands while ensuring broader compatibility across various industry verticals. The goal is to facilitate economic growth and technological advancements across different sectors⁸.

The Reality Check: Performance vs. Practicality

Let's be honest about what this model represents. Hunyuan-A13B isn't trying to be the biggest or most powerful model out there. Instead, it's optimized for practical deployment scenarios where you need solid performance without requiring massive computational resources.

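The 13 billion active parameters keep per-token compute close to that of a 13B dense model, making it deployable in environments where larger dense models would be impractical, while the 80 billion total parameters in the MoE architecture help it avoid sacrificing capability for efficiency⁴⁵. The trade-off is memory: all 80 billion parameters still have to be loaded, even though only 13 billion are used for any given token.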
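A quick back-of-the-envelope calculation shows both sides of that trade-off. This sketch counts weight memory only (no KV cache, activations, or framework overhead) at a few common precisions; whether a given quantized build is available for Hunyuan-A13B is an assumption to check.

```python
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, dtype: str) -> float:
    # Weights only: ignores KV cache, activations, and runtime overhead. Decimal GB.
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    stored = weight_memory_gb(80e9, dtype)  # every expert must be resident in memory
    used = weight_memory_gb(13e9, dtype)    # weights actually used for a single token
    print(f"{dtype}: store {stored:.0f} GB, use {used:.1f} GB per token")
```

Per-token compute tracks the 13B figure, but the memory you must provision tracks the 80B one: about 160 GB of weights in bf16, or roughly 40 GB at 4-bit precision.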

Industry feedback suggests this balance is hitting the mark. Users report significant improvements in workflow efficiency while maintaining the quality needed for professional applications³.

Looking Forward: Sustainable AI Development

The approach behind Hunyuan-A13B suggests a maturing understanding of what makes AI useful in practice. Rather than chasing ever-larger parameter counts, the focus is on sustainable performance that can be broadly deployed.

Future developments are expected to emphasize:

- Enhanced cross-industry compatibility for diverse digital operations

- Improved resource efficiency for wider accessibility

- Community-driven feature development responding to real user needs

This philosophy of sustainable scaling could influence how the industry approaches model development going forward⁸.

The Bottom Line

Hunyuan-A13B represents a thoughtful approach to large language model development that prioritizes practical utility over raw scale. While other companies chase headline-grabbing parameter counts, Tencent has built something that's actually usable in real-world scenarios without sacrificing performance.

What impresses me most is the balance between capability and accessibility. The dual-mode reasoning, efficient architecture, and developer-friendly implementation suggest this was built by people who understand both the technology and the practical challenges of deploying AI in production environments.

The AI landscape is full of impressive demos that never quite work in practice. Hunyuan-A13B feels different—like a tool built for the long term, designed to be genuinely useful rather than just technically impressive.

For developers and organizations looking for a capable language model that won't break the bank or require a data center to run, Hunyuan-A13B deserves serious consideration. It's not the flashiest option available, but it might just be the smartest.

References

[1] Tencent-Hunyuan/Tencent-Hunyuan-Large - GitHub

[2] HunyuanCustom: A Multimodal-Driven Architecture for Customized Video Generation

[3] The Architectural Imagination - Harvard University

[5] Hunyuan-A13B released : r/LocalLLaMA - Reddit

[6] Daily Papers - Hugging Face

[7] Tencent Unveils Hunyuan-A13B: Efficient 13B Active Parameter Model - NVIDIA

[8] Boosting Precise Sea Acquisition Strategies with Light-weighted Deep Learning

[9] Tencent Open Sources Hunyuan-A13B: An AI Model with Advanced Capabilities - AIbase

[10] Tencent-Hunyuan/Hunyuan-A13B - GitHub

[11] Tencent has open-sourced Hunyuan-A13B, a Mixture-of-Experts Model - YouTube

[12] Tencent Hunyuan Updates: Focusing on Both Multimodality and Efficiency - 36Kr

[13] Tencent Open Sources Hunyuan-A13B - Facebook

[14] HunyuanVideo: A Systematic Framework For Large Video Generation - GitHub

[15] Oracle Health

[16] Tencent HunYuan: Building a High-Performance Inference System - NVIDIA

[17] tencent/HunyuanVideo-I2V - Hugging Face

[18] HunyuanVideo: A Systematic Framework For Large Video Generation - arXiv

[19] UpToDate: Trusted, evidence-based solutions for modern healthcare

[20] What are the application scenarios of Tencent Hunyuan Model?